Fine-tune a Mixture of Experts on Your Computer

Cheap supervised fine-tuning for MoEs with QLoRA

A wave of mixture of experts (MoE) and merged models is currently surging on the Hugging Face Hub. In a previous article, we reviewed Phixtral, a merge of several Phi-2 LLMs, and made our own MoE.

Although these new LLMs are simply merges of several LLMs, they often outperform the individual LLMs they were built from, without any fine-tuning.

In this article, I first discuss the memory requirements for fine-tuning MoE/merged LLMs and then show how to fine-tune them using QLoRA. For demonstration, I use my Maixtchup model, but the same method works for any other MoE made of Llama 2 or Mistral 7B models.

I have implemented this fine-tuning in the following notebook:

You can get Maixtchup from the Hugging Face Hub:

How Much Memory Do We Need to Fine-tune 4xMistral 7B?

Maixtchup is a 24-billion-parameter model. Fine-tuning it on consumer hardware is challenging.

One fp16 (16-bit) parameter occupies 2 bytes in memory, so 24 billion fp16 parameters occupy 48 GB. Even an expensive GPU with 48 GB of VRAM wouldn't be enough to load the entire model. To load it on a consumer GPU (e.g., with 24 GB of VRAM), we could offload 3 of the 4 experts to the CPU RAM, but fine-tuning would then become extremely slow.
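The arithmetic above is simply the number of parameters multiplied by the bytes per parameter. Here is a minimal Python sketch of it; the helper name `weight_memory_gb` is ours, for illustration. It counts only the weights and ignores activations, gradients, optimizer states, and CUDA overhead, which all come on top during fine-tuning.

```python
def weight_memory_gb(n_params: float, bits_per_param: int) -> float:
    """Memory taken by the model weights alone, in GB (1 GB = 1e9 bytes)."""
    bytes_per_param = bits_per_param / 8
    return n_params * bytes_per_param / 1e9


if __name__ == "__main__":
    # Maixtchup has about 24 billion parameters.
    fp16_gb = weight_memory_gb(24e9, 16)
    print(f"fp16 weights: {fp16_gb:.0f} GB")  # 48 GB
    print(f"Fits on a 24 GB GPU: {fp16_gb <= 24}")  # False
```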

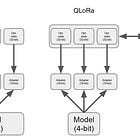

The best alternative is to quantize the model to 4-bit and then fine-tune an adapter on top of it. This is the QLoRA method. If you are not familiar with QLoRA, we have used it several times in previous articles, and you can find a detailed explanation of how it works here:

With 4-bit quantization, one parameter occupies only 0.5 bytes, which divides the model's memory footprint by 4. We need only 14 GB to load a quantized Maixtchup (not 12 GB, since not all the parameters are quantized).
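To see why the quantized model needs more than the naive 12 GB, here is a rough sketch that extends the same arithmetic. It rests on two assumptions: some parameters stay in 16-bit (typically the token embeddings and the output head, each 32,000 × 4,096 in Mistral 7B), and every quantized weight carries a small storage overhead for its quantization scale (0.5 bits per weight if one 32-bit scale is stored per block of 64 weights). The helper name and these values are illustrative, not measured on Maixtchup.

```python
def quantized_weight_gb(
    n_params: float,
    n_unquantized: float,
    bits: int = 4,
    overhead_bits: float = 0.0,
) -> float:
    """Rough weight memory (GB) of a model quantized to `bits`, where
    `n_unquantized` parameters are kept in 16-bit and every quantized
    parameter carries `overhead_bits` of extra storage for its scale."""
    n_quantized = n_params - n_unquantized
    total_bits = n_quantized * (bits + overhead_bits) + n_unquantized * 16
    return total_bits / 8 / 1e9


if __name__ == "__main__":
    n_params = 24e9
    # Assumption: embeddings + output head stay in 16-bit (2 x 32,000 x 4,096).
    n_unquantized = 2 * 32_000 * 4_096
    # Assumption: one 32-bit scale per block of 64 weights -> 32 / 64 = 0.5 bit.
    overhead = 32 / 64

    print(f"{quantized_weight_gb(n_params, 0):.1f} GB")  # naive: 12.0 GB
    print(f"{quantized_weight_gb(n_params, n_unquantized, overhead_bits=overhead):.1f} GB")  # 13.9 GB
```

Under these assumptions, the estimate lands in the same ballpark as the 14 GB above.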

So we need a GPU with at least 14 GB, but we still don't know how much memory the fine-tuning itself will require. It depends on several hyperparameters, mainly the training batch size and the maximum sequence length of the training examples.

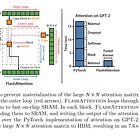

Since we will fine-tune the model on long training examples (see next section), I set the maximum sequence length to 1,024 tokens. I also activate FlashAttention 2 to reduce the computational cost and memory consumption of training on long sequences.
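One way to see why FlashAttention 2 matters at this sequence length: a standard ("eager") attention implementation materializes a score matrix of size sequence length × sequence length for every head in every layer, whereas FlashAttention computes attention in tiles without storing that full matrix. The sketch below estimates only the size of those 16-bit score matrices, assuming Mistral 7B's 32 layers and 32 attention heads. It ignores every other activation, as well as the fact that eager training may keep more than one such tensor per layer for the backward pass.

```python
def attention_scores_bytes(
    batch_size: int,
    seq_len: int,
    n_heads: int = 32,
    n_layers: int = 32,
    bytes_per_value: int = 2,
) -> int:
    """Bytes needed to store one (seq_len x seq_len) attention score matrix
    per head and per layer for a whole batch, as eager attention would."""
    per_layer = batch_size * n_heads * seq_len * seq_len * bytes_per_value
    return per_layer * n_layers


if __name__ == "__main__":
    GiB = 1024**3
    for seq_len in (512, 1024):
        size = attention_scores_bytes(batch_size=2, seq_len=seq_len)
        print(f"seq_len={seq_len}: {size / GiB:.0f} GiB")
    # seq_len=512: 1 GiB
    # seq_len=1024: 4 GiB
```

Doubling the sequence length quadruples this term. Because FlashAttention 2 never stores it, its memory grows only linearly with the sequence length.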

What remains is the memory cost for a given batch size. To measure it, I ran fine-tuning with different batch sizes.

If you target a 24 GB GPU (RTX 3090/4090), the maximum batch size is 3, but for training efficiency, it is recommended to use an even value, so I set it to 2.

If you target a 16 GB GPU (RTX 4060 Ti/4070 Ti Super/4080), even a batch size of 1 would consume too much memory. You will need to reduce the maximum sequence length to 512; a batch size of 2 then fits.
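The trial-and-error search for the batch size described above can be sketched as follows. `fits` is a hypothetical callback you would implement yourself, for instance by running a few training steps with the given batch size and returning `False` when PyTorch reports an out-of-memory error. Rounding down to an even value follows the recommendation above. The fake `fits` in the usage example is made up to mirror the 24 GB case; it is not a measurement.

```python
from typing import Callable


def pick_batch_size(fits: Callable[[int], bool], max_batch_size: int = 16) -> int:
    """Return the largest batch size that fits in memory, rounded down to an
    even value when possible. Returns 0 if even a batch size of 1 does not fit."""
    largest = 0
    for batch_size in range(1, max_batch_size + 1):
        if not fits(batch_size):
            break  # memory only grows with the batch size, so stop here
        largest = batch_size
    if largest > 1 and largest % 2 == 1:
        largest -= 1  # prefer an even batch size
    return largest


if __name__ == "__main__":
    # Hypothetical stand-in for a real trial run: pretend batches of up to 3 fit.
    print(pick_batch_size(lambda bs: bs <= 3))  # 2
```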

Distilled Supervised Fine-tuning

This supervised fine-tuning (SFT) aims to turn Maixtchup, used as the base model, into a chat model that can follow instructions. For training, we need an instruction dataset: instructions paired with correct answers.
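To make "instructions paired with a correct answer" concrete, here is a sketch of one training example stored in the role/content message format used by many chat datasets on the Hugging Face Hub, then flattened into a single training text. The `<|user|>`/`<|assistant|>` markers are a hypothetical template chosen for illustration, not necessarily the format used to fine-tune Maixtchup. In practice, `tokenizer.apply_chat_template` in Transformers renders messages with the tokenizer's own template.

```python
def format_example(messages: list[dict[str, str]]) -> str:
    """Flatten a list of {"role", "content"} messages into one training text
    using a hypothetical chat template."""
    parts = []
    for message in messages:
        parts.append(f"<|{message['role']}|>\n{message['content']}")
    return "\n".join(parts)


# One instruction paired with its answer.
example = [
    {"role": "user", "content": "Translate 'bonjour' into English."},
    {"role": "assistant", "content": "Hello."},
]

if __name__ == "__main__":
    print(format_example(example))
```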