Hardware for LLMs

This guide recommends computer parts to fine-tune and run LLMs on your computer.

In this first version, I only recommend consumer GPUs, CPU RAM, CPUs, and hard drives. I’ll update this page regularly.

This guide is organized as follows:

The most important computer parts for running LLMs

Graphics card (GPU)

CPU RAM

CPU

Hard drive

How to Make a Custom Hardware Configuration that Works

Recommended Configurations per Model Size

Up to 2B parameters

Up to 7B parameters

Up to 13B parameters

14B+ parameters

Cloud Computing with Notebooks

Paperspace

Google Colab

Note: I use Amazon affiliate links to recommend computer parts.

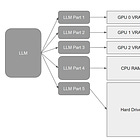

The most important computer parts for running LLMs

Graphics card (GPU)

In some of my articles, we have seen that we can run LLMs without a GPU. For instance, we can load and run Falcon-180B using only CPU RAM. If you don’t plan to do batch decoding, you don’t need a GPU.

However, if you want to do batch decoding or fine-tune LLMs, then a GPU is (almost) necessary. Fortunately, we will see that cheap GPUs are often enough to run billion-parameter models.

The quantity of memory (VRAM) and the architecture are by far the most important characteristics of a GPU for running LLMs.

You can install several GPUs in a consumer computer (often up to 2). You just have to be careful that you have enough space in your computer case and that your power supply can deliver enough watts.

I will only suggest NVIDIA GPUs. AMD and Intel GPUs are also capable of running LLMs but my knowledge of their architecture is too limited to make any recommendations.

CPU RAM

CPU RAM is cheap and that’s good news because you will need a lot of it. If you look at CPU RAM online, you will see many characteristics that people (especially gamers) care about: voltage, latency, timings, frequency, memory type (DDR4, DDR5, …), and so on.

For LLMs, you only need to care about the amount of memory. Other characteristics will have little impact, especially if you use a GPU.

A good and popular rule of thumb is to have twice as much CPU RAM as VRAM. One of the reasons is that when we load PyTorch pickle models, we create an empty tensor and then load the model’s weights in memory before transferring the model to the GPU. This consumes twice as much CPU RAM as VRAM.

If you use safetensors, the model is loaded directly on the GPU.

Nonetheless, you should expect to have to deal with PyTorch pickled models, which is the format used by the large majority of LLMs. For your peace of mind, and because it’s cheap, consider setting up twice as much CPU RAM as VRAM.
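The rule of thumb above can be sketched in a few lines of Python. This is only an illustration: the list of memory sizes and the function name are assumptions made for this example, and the result is rounded up to the next size in that list.

```python
import math

# Total RAM sizes considered "standard" in this sketch (an assumption).
COMMON_RAM_SIZES_GB = (8, 16, 32, 64, 128, 256)


def recommended_cpu_ram_gb(vram_gb: float, ratio: float = 2.0) -> int:
    """Apply the 'twice as much CPU RAM as VRAM' rule of thumb.

    The raw target (vram_gb * ratio) is rounded up to the next size
    listed in COMMON_RAM_SIZES_GB.
    """
    target = vram_gb * ratio
    for size in COMMON_RAM_SIZES_GB:
        if size >= target:
            return size
    # Larger than every listed size: return the raw target, rounded up.
    return math.ceil(target)


for vram in (8, 16, 24):
    print(f"{vram} GB of VRAM -> {recommended_cpu_ram_gb(vram)} GB of CPU RAM")
```

With 24 GB of VRAM, the raw target is 48 GB, which the sketch rounds up to 64 GB.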

If you plan to run extremely large LLMs without a GPU, then you will need a lot of CPU RAM.

CPU

Unless you plan to run LLMs without a GPU, the choice of the CPU won’t have much impact. Both AMD and Intel CPUs are good choices.

However, if you plan to process huge datasets or consume a large quantity of CPU RAM, then you should choose a high-end CPU with a lot of cores.

Hard drive

You should have both a mechanical hard drive and an SSD.

You definitely want to run your LLMs on the SSD, especially if you have to offload some parts of your models due to a lack of VRAM/CPU RAM.

I recommend M.2 SSDs. M.2 NVMe drives are much faster than SATA drives, but the number of M.2 slots on a motherboard is more limited.

I recommend SSDs with at least 1 TB of storage, for instance:

You also want a large mechanical hard drive to store your checkpoints and do some local versioning of your model. I recommend 8 TB to start with, for instance:

Computer cases and motherboards can host several hard drives so you can easily extend your storage with more hard drives later.

How to Make a Custom Hardware Configuration that Works

Whether you are building a new machine from scratch or upgrading one, you should check that all the computer parts that you have are compatible with each other. Here are some questions that you will have to answer:

Can the power supply support this configuration?

Is this CPU/CPU RAM compatible with the motherboard?

Can these 2 GPUs fit into this computer case?

You could look at the documentation of all the computer parts to answer all these questions by yourself.

I use pcpartpicker. It can detect potential compatibility issues and also helps to design your machine.
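If you want to sanity-check the power supply question by hand, the arithmetic is simple: add up the power draw of the components and keep some headroom. Here is a minimal sketch. The wattages below are made-up placeholders, not the specifications of any real part, and the 20% headroom is an assumption; always use the figures from the documentation of your own components.

```python
def psu_is_sufficient(component_watts: dict, psu_watts: float,
                      headroom: float = 0.2) -> bool:
    """Return True if the power supply covers the total draw plus headroom.

    component_watts maps a component name to its peak power draw in watts.
    headroom is the extra margin kept on top of the total (0.2 = 20%).
    """
    required = sum(component_watts.values()) * (1 + headroom)
    return psu_watts >= required


# Hypothetical build with two GPUs (placeholder numbers only).
build = {"gpu_1": 300, "gpu_2": 300, "cpu": 125, "other": 75}

print(psu_is_sufficient(build, psu_watts=850))
print(psu_is_sufficient(build, psu_watts=1000))
```

In this made-up build, the components draw 800 W in total, so an 850 W power supply fails the check with 20% headroom while a 1,000 W one passes.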

Recommended Configurations per Model Size

The cost of the configuration that you need will vary greatly depending on the size of the models you plan to manipulate.

Given a model size, I picked computer parts that I think have the best value now.

One billion fp16 parameters occupy roughly 2 GB of memory. With x being the number of parameters in billions, we need at least 2*x GB of memory just to load the model. To account for some memory overhead and to leave room for batch inference/fine-tuning, I add 25% to get the minimum memory and 50% to get the recommended memory. For instance, for Llama 2 7B (so x=7), this method yields:

Minimum Memory for a 7B parameter model

2*7+(2*7*0.25)=17.5 GB

Recommended Memory for a 7B parameter model

2*7+(2*7*0.5)=21 GB

It means that for fully fine-tuning Llama 2 7B you will need at least 17.5 GB of VRAM. Note that it’s a minimum. Fine-tuning Llama 2 7B with only 17.5 GB is feasible only with tiny batch sizes. With 21 GB of VRAM, you can do inference with longer prompts and larger batch sizes.
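The same calculation can be written as a small Python helper. This is a minimal sketch of the rule described above for fp16 models; the function name and the returned keys are my own choices for this example.

```python
BYTES_PER_FP16_PARAM = 2  # one billion fp16 parameters occupy roughly 2 GB


def estimate_memory_gb(params_billion: float) -> dict:
    """Estimate the memory needed for an fp16 model, in GB.

    load:        memory to hold the weights only (2 * x)
    minimum:     weights + 25% for overhead and small batches
    recommended: weights + 50% for longer prompts and larger batches
    """
    load = BYTES_PER_FP16_PARAM * params_billion
    return {
        "load": load,
        "minimum": load * 1.25,
        "recommended": load * 1.5,
    }


estimate = estimate_memory_gb(7)  # Llama 2 7B, so x=7
print(estimate)  # load: 14 GB, minimum: 17.5 GB, recommended: 21 GB
```

You can call it with 2, 7, or 13 to get the figures used in the configurations below.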

Divide this memory requirement by 4 if you plan to only run/fine-tune 4-bit quantized models (so only about 4.4 GB to 5.3 GB of VRAM for Llama 2 7B, which also leaves room for a much larger batch size).

Up to 2B parameters

Examples of models: facebook/opt-1.3b, microsoft/phi-1_5

In fp16, 2B parameters occupy 4 GB of memory, or 1 GB if quantized to 4-bit. You need a GPU with 6 GB of VRAM and 12 GB of CPU RAM.

The price difference between GPUs with 6 GB and 8 GB is not really significant. I recommend choosing between two GPUs with 8 GB of VRAM, the RTX 3060 and the RTX 4060, which are both under $350. The RTX 3060 is older, cheaper, and slower, but I think it is still a good deal.

Asus Dual GeForce RTX™ 3060 White OC Edition 8GB GDDR6 (budget)

GIGABYTE GeForce RTX 4060 Eagle OC 8G (recommended)

With this GPU, opt for 16 GB of CPU RAM.

Or, if you have a motherboard supporting DDR5: CORSAIR VENGEANCE DDR5 RAM 16GB (2x8GB)

You don’t need a powerful CPU to support this configuration.

If you plan to process a large dataset, I recommend this one which has more cores (so better parallelization capabilities):

Up to 7B parameters

Examples of models: EleutherAI/gpt-j-6b, meta-llama/Llama-2-7b-hf, mistralai/Mistral-7B-v0.1

7B parameter models are now very popular but they are at the limit of what consumer GPUs can handle (without any compression/quantization).

You need a GPU with more than 16 GB of VRAM.

High-end consumer GPUs have a maximum of 24 GB of VRAM, so you have two choices here: The RTX 3090 24 GB or the RTX 4090 24 GB. You can find a refurbished 3090 between $1,000 and $1,500. The 4090 is 50% more expensive (new) but significantly faster. I recommend the following:

However, if you plan to only use quantized models, then 16 GB, 12 GB, or even 8 GB of VRAM would be enough. You can get the RTX 3060 12 GB but, as an owner of this card, I can tell you that it feels quite slow compared to more recent GPUs. The RTX 4060 Ti 16 GB is now a much better deal:

32 GB or 64 GB of CPU RAM is enough to support this configuration.

Corsair VENGEANCE LPX DDR4 RAM 32GB (budget)

Corsair VENGEANCE LPX DDR4 64GB (2x32GB) (recommended)

For this configuration, you want a CPU that can process that much CPU RAM fast:

Up to 13B parameters

Examples of models: meta-llama/Llama-2-13b-hf, facebook/opt-13b, EleutherAI/pythia-12b-deduped

With this many parameters, we are over the limit of what consumer GPUs can handle. At a minimum, you would need a GPU with 32 GB of VRAM. A GPU with more than 40 GB is necessary for a stress-free experience (long prompts, large batches).

Professional GPUs with that much VRAM are expensive.

If you quantize a 13B parameter model to 4-bit, you only need 6.5 GB to load it. With an RTX 3060 12 GB, you would be able to run batch decoding/fine-tuning but, again, I would rather recommend the RTX 4060 Ti 16 GB, which is a much better deal now.

48 GB of CPU RAM could be enough but I recommend 64 GB, which is a more standard size than 48 GB. You can easily find 2x32 GB memory kits, which leave two RAM slots free on your motherboard for later upgrades.

For this configuration, you need a powerful CPU:

14B+ parameters

Examples of models: EleutherAI/gpt-neox-20b, meta-llama/Llama-2-70b-hf, tiiuae/falcon-180B

Running 14B+ models on a budget is challenging but not impossible. For instance, using consumer GPUs, two RTX 4090 24 GB cards give you a total of 48 GB of VRAM, which is enough for models with up to 20B parameters.

GIGABYTE GeForce RTX 4060 Ti Gaming OC 16G Graphics Card, 3X WINDFORCE Fans, 16GB 128-bit GDDR6 (x2, giving 32 GB of VRAM)

GIGABYTE GeForce RTX 4090 Gaming OC 24G Graphics Card (x2, giving 48 GB of VRAM, but check that your motherboard supports two big GPUs and that your power supply delivers enough watts)
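To see roughly how large a model these two-GPU options can hold, we can invert the sizing rule from earlier (2 GB per billion fp16 parameters, plus 25% overhead). This is a back-of-the-envelope sketch: the function name is my own, and it assumes the VRAM of both cards can be pooled without any extra cost, which is optimistic in practice.

```python
def max_params_billion(vram_per_gpu_gb: float, num_gpus: int = 1,
                       overhead: float = 0.25,
                       bytes_per_param: float = 2.0) -> float:
    """Largest fp16 model (in billions of parameters) that fits in total VRAM.

    Inverts the 'minimum memory' rule:
        total_vram = bytes_per_param * x * (1 + overhead)
    """
    total_vram_gb = vram_per_gpu_gb * num_gpus
    return total_vram_gb / (bytes_per_param * (1 + overhead))


for name, vram_gb in (("RTX 4060 Ti 16 GB", 16), ("RTX 4090 24 GB", 24)):
    size = max_params_billion(vram_gb, num_gpus=2)
    print(f"2x {name}: about {size:.1f}B parameters")
```

For two 24 GB cards, this gives about 19.2B parameters, in line with the "up to 20B" figure above. Two 16 GB cards give about 12.8B.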

As an alternative, you can use a professional GPU with more VRAM than consumer GPUs. It may be cheaper than two RTX 4090s, for instance.

Then, you can split the model so that a part is loaded into the VRAM and the remaining parts into the CPU RAM. In this case, keep in mind that fine-tuning will be quite slow but feasible.
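To give an idea of what such a split looks like, here is a simplified sketch that assigns the layers of a model to the GPU first and spills the rest to CPU RAM. Libraries such as Hugging Face Accelerate can do this placement automatically. The code below is not their algorithm, only an illustration, and the layer sizes and memory budgets are hypothetical.

```python
def place_layers(layer_sizes_gb: list, vram_budget_gb: float,
                 cpu_ram_budget_gb: float) -> list:
    """Assign each layer, in order, to 'gpu' until VRAM is full, then to 'cpu'.

    Once a layer spills to CPU RAM, all following layers go there too,
    so each device holds a contiguous block of the model.
    """
    devices = ["gpu", "cpu"]
    free_gb = {"gpu": vram_budget_gb, "cpu": cpu_ram_budget_gb}
    current = 0
    placement = []
    for size in layer_sizes_gb:
        # Move on to the next device when the current one is full.
        while current < len(devices) and free_gb[devices[current]] < size:
            current += 1
        if current == len(devices):
            raise MemoryError("The model does not fit in VRAM + CPU RAM")
        free_gb[devices[current]] -= size
        placement.append(devices[current])
    return placement


# Hypothetical 40 GB model made of 10 layers of 4 GB each,
# with 24 GB of VRAM and 64 GB of CPU RAM available.
placement = place_layers([4.0] * 10, vram_budget_gb=24, cpu_ram_budget_gb=64)
print(placement.count("gpu"), "layers on the GPU,",
      placement.count("cpu"), "layers in CPU RAM")
```

In this example, 6 layers fit on the GPU and the remaining 4 go to CPU RAM. Every pass through the model has to go through the layers held in CPU RAM, which is why fine-tuning with this kind of split is slow.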

You can install a lot of CPU RAM in your computer. In a previous article, I described how to run a 4-bit Falcon 180B with 128 GB of CPU RAM.

To process extremely large LLMs, you need a high-end CPU:

Cloud Computing with Notebooks

Paperspace

Paperspace has a large choice of GPUs and it’s very easy to use. I use it to draft my notebooks before exporting them to Google Colab.

GPUs are more expensive per hour than Google Colab but you won’t get disconnected when you run long processes.

Creating a Paperspace account is free. If you use my referral code, you get $10 in credits (more than 10 hours of computing using a good GPU, enough to fine-tune LoRA adapters for instance). Click here to open a Paperspace account and get the $10 in credits:

Google Colab

I use Google Colab a lot but I can’t recommend it for other purposes than writing tutorials or very small experiments.

Google Colab is not meant for running long processes. When I fine-tune models with the A100 of Google Colab+, my notebook often gets disconnected from my Google Drive where I save checkpoints.

The choice of GPUs is also very limited: T4, V100, and A100. Only the A100 is based on the Ampere architecture.

On the other hand, Google Colab has one of the cheapest usage costs. For instance, it costs around $1 per hour for an A100 with 40 GB of VRAM and 80 GB of CPU RAM.

There is also a free instance of Google Colab. It’s limited to a T4 GPU with 16 GB of VRAM and 12 GB of CPU RAM, but this is enough to run a quantized model with 7B parameters.
