This article summarizes my previous articles on fine-tuning and running Llama 2 on a budget. I rely heavily on quantization, but without sacrificing performance, by adopting the best practices and hyperparameters known to date.

I’ll pin this article on The Kaitchup homepage and I’ll keep updating it with new open datasets, better hyperparameters, etc.

If you have any suggestions or information that you would like me to add, please leave a comment.

How to get Llama 2

You must register to get it from Meta. The form to get it is there. You should receive an email from Meta within one hour.

Then, since I’ll use the Hugging Face Hub, you will also need to create a Hugging Face account. The email address of this account must be the same as the one you used to request the Llama 2 weights from Meta.

Then, go to a Llama 2 model card, and follow the instructions (you should be logged in to your account and see a checkbox to check and a button to click at the top of the model card). This step takes more time, but you should get access to Llama 2 on the Hugging Face hub within 1 day.

You must also create an access token from your Hugging Face account. Go to “settings” in your Hugging Face account and generate one.
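You can also use the token from Python. The sketch below relies on `huggingface_hub.login` and assumes you stored the token in an environment variable named `HF_TOKEN`. Both the variable name and the helper `login_from_env` are my own choices.

```python
import os


def login_from_env(var_name: str = "HF_TOKEN") -> bool:
    """Log in to the Hugging Face Hub with a token read from an environment variable.

    Returns False, without contacting the Hub, if the variable is not set.
    """
    token = os.environ.get(var_name)
    if not token:
        return False
    # Imported lazily so that this helper can be defined without huggingface_hub installed.
    from huggingface_hub import login

    login(token=token)  # stores the token locally so later downloads are authenticated
    return True
```

Alternatively, run `huggingface-cli login` in a terminal. It prompts for the token interactively.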

You will get access to the following models:

These are the Llama 2 models converted by Hugging Face to be easily used with Hugging Face libraries. If you want the original versions released by Meta, remove the “-hf” in the URLs.

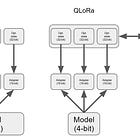
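The repository names follow a regular pattern, so a small helper can build them. This sketch assumes the `meta-llama/Llama-2-{size}[-chat]-hf` naming used on the Hub. `llama2_repo_id` is a hypothetical helper, not part of any library.

```python
def llama2_repo_id(size: str = "7b", chat: bool = False, hf_format: bool = True) -> str:
    """Build the Hub repository name of a Llama 2 model.

    size: "7b", "13b", or "70b"; chat=True selects the chat variant;
    hf_format=False drops the "-hf" suffix to get Meta's original checkpoint.
    """
    if size not in {"7b", "13b", "70b"}:
        raise ValueError(f"Unknown Llama 2 size: {size!r}")
    name = f"meta-llama/Llama-2-{size}"
    if chat:
        name += "-chat"
    if hf_format:
        name += "-hf"
    return name


print(llama2_repo_id("13b", chat=True))  # meta-llama/Llama-2-13b-chat-hf
```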

Cheap fine-tuning of Llama 2 with QLoRA

To fine-tune Llama 2 with QLoRA, follow the instructions in this tutorial:

Hardware requirements

Note: This section contains Amazon affiliate links. If you buy anything on amazon.com after clicking on these links, I will earn a commission.

These requirements can be slightly lower if you don’t do batching, e.g., it should be possible to fine-tune Llama 2 7B on 8 GB of VRAM without batching.

Llama 2 7B:

10 GB of VRAM

10 GB of CPU RAM, if you use the safetensors version, more otherwise

Examples of minimum configuration:

RTX 3060 12 GB (which is very cheap now) or a more recent card such as the RTX 4060 Ti 16 GB.

Google Colab free

I have an RTX 3060 12 GB and I can say it’s enough to fine-tune Llama 2 7B (quantized). I bought it in May 2022. The RTX 4060 Ti 16 GB looks like a much better deal today: it has 4 GB more VRAM and it’s much faster for AI, for less than $500.

Llama 2 13B:

24 GB of VRAM

24 GB of CPU RAM, if you use the safetensors version, more otherwise

Example of minimum configuration:

RTX 3090 24 GB or a more recent card such as the RTX 4090 24 GB

The RTX 3090 is nearly $1,000. The RTX 4090 has the same amount of memory but is significantly faster for $500 more. Note: These cards are big. Check that you have enough space in your computer.

As for the CPU RAM, it is often sold as a kit of two 16 GB modules for less than $100, such as the Corsair Vengeance 32 GB.
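If you want to check a machine against these numbers, here is a small sketch. The thresholds are the rough figures from this section, so the check is only approximate. `local_vram_gb` is a hypothetical helper that reads the first GPU's total memory through PyTorch.

```python
from typing import List, Optional

# Rough VRAM needed for QLoRA fine-tuning with batching, from this section (decimal GB).
MIN_VRAM_GB = {"Llama 2 7B": 10, "Llama 2 13B": 24}


def models_that_fit(vram_gb: float) -> List[str]:
    """Return the Llama 2 models whose listed VRAM requirement fits in vram_gb."""
    return [name for name, need in MIN_VRAM_GB.items() if vram_gb >= need]


def local_vram_gb() -> Optional[float]:
    """Total memory of the first CUDA GPU in decimal GB, or None without PyTorch/CUDA."""
    try:
        import torch  # lazy import so models_that_fit works without PyTorch
    except ImportError:
        return None
    if not torch.cuda.is_available():
        return None
    return torch.cuda.get_device_properties(0).total_memory / 1e9


print(models_that_fit(12))  # ['Llama 2 7B']
```

On a machine with a GPU, `models_that_fit(local_vram_gb() or 0)` lists what you can fine-tune locally.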

Hyperparameters for Fine-tuning

The quantization hyperparameters are standard. For LoRA and SFTTrainer hyperparameters, I recommend the ones used by Platypus.

Quantization hyperparameters

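```python
import torch
from transformers import BitsAndBytesConfig

compute_dtype = torch.float16  # dtype used for computations with the dequantized weights

bnb_config = BitsAndBytesConfig(
    load_in_4bit=True,                  # store the base model weights in 4-bit
    bnb_4bit_quant_type="nf4",          # NormalFloat4 data type introduced by QLoRA
    bnb_4bit_compute_dtype=compute_dtype,
    bnb_4bit_use_double_quant=True,     # also quantize the quantization constants
)
```

These are very standard hyperparameters for fine-tuning with QLoRA. You may set bnb_4bit_use_double_quant to False to speed up fine-tuning if you have enough VRAM.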
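The quantization config only takes effect when the model is loaded. Here is a minimal sketch, assuming the `bnb_config` defined above and the `meta-llama/Llama-2-7b-hf` repository. The helper name `load_llama2_4bit` is mine.

```python
def load_llama2_4bit(bnb_config, model_id: str = "meta-llama/Llama-2-7b-hf"):
    """Load Llama 2 quantized on the fly with bitsandbytes, plus its tokenizer."""
    # Lazy import: requires transformers, accelerate, bitsandbytes, and a CUDA GPU.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    model = AutoModelForCausalLM.from_pretrained(
        model_id,
        quantization_config=bnb_config,  # e.g., the 4-bit NF4 config above
        device_map={"": 0},              # put the whole model on the first GPU
    )
    tokenizer = AutoTokenizer.from_pretrained(model_id, use_fast=True)
    tokenizer.pad_token = tokenizer.unk_token  # Llama 2 has no padding token
    return model, tokenizer
```

Usage: `model, tokenizer = load_llama2_4bit(bnb_config)`. The LoRA adapter is then added by SFTTrainer through its `peft_config` argument.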

LoRA hyperparameters

```python
from peft import LoraConfig

peft_config = LoraConfig(
    lora_alpha=16,
    lora_dropout=0.05,
    r=16,
    bias="none",
    task_type="CAUSAL_LM",
    # Only the MLP projections are adapted; the attention projections are left frozen.
    target_modules=["gate_proj", "down_proj", "up_proj"],
)
```

These hyperparameters are the ones used by Platypus. In my experiments, they produce better adapters than the hyperparameters proposed in Meta’s llama-recipes.
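The adapter is small, and you can compute its size directly from the shapes of the targeted layers. A LoRA adapter on a linear layer with d_in inputs and d_out outputs adds r * (d_in + d_out) parameters. This sketch assumes Llama 2's published layer sizes. `lora_trainable_params` is a hypothetical helper.

```python
# Llama 2 shapes: (hidden size, MLP intermediate size, number of decoder layers).
LLAMA2_SHAPES = {"7b": (4096, 11008, 32), "13b": (5120, 13824, 40)}


def lora_trainable_params(size: str = "7b", r: int = 16) -> int:
    """Count LoRA parameters when targeting gate_proj, up_proj, and down_proj.

    Each adapter has A (r x d_in) and B (d_out x r): r * (d_in + d_out) parameters.
    """
    hidden, intermediate, n_layers = LLAMA2_SHAPES[size]
    per_module = r * (hidden + intermediate)  # identical for the three MLP projections
    return 3 * per_module * n_layers


print(lora_trainable_params("7b"))   # 23199744
print(lora_trainable_params("13b"))  # 36372480
```

With r=16, this gives about 23.2M trainable parameters for Llama 2 7B, roughly 0.34% of its 6.7B parameters. It should match the trainable parameter count reported by PEFT's `print_trainable_parameters()`.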

SFTTrainer hyperparameters

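```python
from transformers import TrainingArguments
from trl import SFTTrainer

# `model`, `tokenizer`, and `dataset` (with "train" and "test" splits and a "text"
# column) are assumed to be loaded beforehand; `peft_config` is the LoRA config above.
training_arguments = TrainingArguments(
    output_dir="./results",
    evaluation_strategy="steps",    # named eval_strategy in recent transformers versions
    do_eval=True,
    per_device_train_batch_size=4,
    gradient_accumulation_steps=8,  # effective batch size: 4 * 8 = 32 per GPU
    per_device_eval_batch_size=4,
    log_level="debug",
    save_steps=100,
    logging_steps=50,
    learning_rate=4e-4,
    eval_steps=200,
    fp16=True,
    num_train_epochs=1,
    warmup_steps=100,
    lr_scheduler_type="cosine",
)

# Newer TRL versions move dataset_text_field and max_seq_length into an SFTConfig.
trainer = SFTTrainer(
    model=model,
    train_dataset=dataset['train'],
    eval_dataset=dataset['test'],
    peft_config=peft_config,
    dataset_text_field="text",
    max_seq_length=512,
    tokenizer=tokenizer,
    args=training_arguments,
)
```

Again, I apply the hyperparameters proposed in Platypus.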
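With these settings, each optimizer update sees 4 * 8 = 32 examples per GPU. The sketch below gives a rough estimate of how many updates a run will make, which helps check whether warmup_steps, save_steps, and eval_steps make sense for your dataset. The helper is hypothetical and ignores packing and the trainer's exact rounding.

```python
import math


def approx_optimizer_steps(num_examples: int, per_device_batch: int = 4,
                           grad_accum: int = 8, num_gpus: int = 1,
                           epochs: int = 1) -> int:
    """Rough number of optimizer updates for the TrainingArguments above."""
    effective_batch = per_device_batch * grad_accum * num_gpus  # 4 * 8 * 1 = 32
    return math.ceil(num_examples / effective_batch) * epochs


steps = approx_optimizer_steps(9_800)  # about the size of openassistant-guanaco
print(steps)                                            # 307
print(f"{100 / steps:.0%} of the updates are warmup")  # 33% of the updates are warmup
```

For a dataset of this size, one epoch makes only about 300 updates. warmup_steps=100 then covers a third of the training, and eval_steps=200 triggers a single evaluation.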

You may use packing (packing=True) to speed up fine-tuning if you have a large instruction dataset (>100k examples). If your dataset has very long triplets {instruction, question, answer}, i.e., examples close to max_seq_length tokens, packing won’t be useful, since you won’t be able to fit several examples in the same sequence. In this situation, I recommend setting it to False.
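The sketch below is a simplified illustration of packing, showing why it helps with many short examples but not with long ones. Tokenized examples are concatenated, separated by an EOS token, and the stream is cut into chunks of exactly max_seq_length tokens. This is similar in spirit to what TRL does with packing=True, but it is not TRL's implementation. `pack_sequences` is a hypothetical helper.

```python
from typing import Iterable, List


def pack_sequences(tokenized: Iterable[List[int]], eos_id: int, max_len: int) -> List[List[int]]:
    """Concatenate tokenized examples (each followed by EOS) and cut the stream
    into chunks of exactly max_len tokens; an incomplete last chunk is dropped."""
    stream: List[int] = []
    for ids in tokenized:
        stream.extend(ids)
        stream.append(eos_id)
    n_full = len(stream) // max_len
    return [stream[i * max_len:(i + 1) * max_len] for i in range(n_full)]


examples = [[5, 6, 7], [8, 9], [10, 11, 12, 13]]
print(pack_sequences(examples, eos_id=2, max_len=4))
# [[5, 6, 7, 2], [8, 9, 2, 10], [11, 12, 13, 2]]
```

An example can be split across two chunks, as with [8, 9, 2, 10] above. When each example is already close to max_seq_length tokens, every chunk holds roughly one example, so packing saves almost no computation.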

Fast and lightweight inference with Llama 2

Hardware requirements

The requirements are similar to the ones I indicated for fine-tuning. If you plan to do batch inference, more VRAM will allow you to create bigger batches. More VRAM also means that you can use a larger beam size.

If your task doesn’t allow batch inference, a more expensive GPU won’t give you much of an advantage. A low-end consumer GPU with 12 GB of VRAM is more than enough.

If speed is not a concern, you may use only your CPU with a framework such as llama.cpp or llama2.rs.

To sum up:

Batch inference: More VRAM for faster inference

No batch inference: Any GPU that can load the model

If speed is not a concern: A (preferably) high-end CPU (Intel i9 for instance)

Inference hyperparameters

There are no standard hyperparameters that will work for all tasks. You will always need to adjust them to obtain better results. I recommend starting with something like this for chat applications:

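```python
from transformers import GenerationConfig
generation_config = GenerationConfig(do_sample=True, temperature=0.9, top_p=0.7, top_k=40, num_beams=10)
```

Note that do_sample=True is required: without it, temperature, top_p, and top_k are ignored. If you find that the output has too many repetitions, you can add “repetition_penalty” and set it to a value greater than 1, for instance, 1.1.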

For other applications for which we don’t need to promote token diversity, such as paraphrasing or translation, I recommend deactivating sampling with do_sample=False and removing the hyperparameters temperature, top_p, and top_k, which only apply when sampling.
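The two recommendations can be written as starting-point settings for a `GenerationConfig`. In this sketch, `generation_kwargs` is a hypothetical helper, and num_beams=10 is simply carried over from the chat configuration.

```python
from typing import Optional


def generation_kwargs(task: str, repetition_penalty: Optional[float] = None) -> dict:
    """Starting-point decoding settings, to be passed as GenerationConfig(**kwargs)."""
    if task == "chat":
        # Sampling-based decoding to promote token diversity.
        kwargs = {"do_sample": True, "temperature": 0.9, "top_p": 0.7,
                  "top_k": 40, "num_beams": 10}
    else:
        # Paraphrasing, translation, etc.: deterministic beam search, no sampling parameters.
        kwargs = {"do_sample": False, "num_beams": 10}
    if repetition_penalty is not None:
        kwargs["repetition_penalty"] = repetition_penalty  # > 1 penalizes repetitions
    return kwargs
```

For instance, `GenerationConfig(**generation_kwargs("chat", repetition_penalty=1.1))` gives the chat settings with a repetition penalty.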

Llama 2 Post-Training Quantization

Quantization with GPTQ reduces the model size of Llama 2 7B by 70%, to almost 4 GB.
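Here is a back-of-the-envelope check of these numbers. It assumes Llama 2 7B's architecture: hidden size 4096, MLP size 11008, 32 layers, and a 32k vocabulary. It also assumes GPTQ quantizes only the linear layers of the decoder blocks, while the embeddings, output layer, and norms stay in float16. The scales and zero-points stored by GPTQ are ignored, which is why the estimate lands a little under 4 GB.

```python
# Llama 2 7B shapes: hidden size, MLP size, decoder layers, vocabulary size.
HIDDEN, INTERMEDIATE, LAYERS, VOCAB = 4096, 11008, 32, 32000

# Attention (q, k, v, o) and MLP (gate, up, down) projections in every decoder layer.
linear_params = LAYERS * (4 * HIDDEN * HIDDEN + 3 * HIDDEN * INTERMEDIATE)
# Input embeddings, lm_head, and RMSNorm weights (2 per layer + 1 final norm).
other_params = 2 * VOCAB * HIDDEN + (2 * LAYERS + 1) * HIDDEN

fp16_gb = (linear_params + other_params) * 2 / 1e9       # 2 bytes per parameter
gptq_gb = (linear_params * 0.5 + other_params * 2) / 1e9  # 4-bit linear layers

print(f"float16: {fp16_gb:.2f} GB")                # float16: 13.48 GB
print(f"4-bit GPTQ: {gptq_gb:.2f} GB")             # 4-bit GPTQ: 3.76 GB
print(f"reduction: {1 - gptq_gb / fp16_gb:.0%}")  # reduction: 72%
```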

You can achieve this quantization directly with transformers:

```python
from transformers import AutoModelForCausalLM, AutoTokenizer, GPTQConfig

model_id = "meta-llama/Llama-2-7b-hf"
tokenizer = AutoTokenizer.from_pretrained(model_id, use_fast=True)

# 4-bit GPTQ calibrated on samples from the C4 dataset (needs the optimum and auto-gptq packages).
quantization_config = GPTQConfig(bits=4, dataset="c4", tokenizer=tokenizer)
model = AutoModelForCausalLM.from_pretrained(model_id, device_map="auto", quantization_config=quantization_config)
```

Llama 2 Padding

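```python
# Pad on the left for batched generation; Llama 2 has no padding token, so reuse <unk>.
tokenizer.padding_side = "left"
tokenizer.pad_token = tokenizer.unk_token
```

More information on padding Llama 2 in this article: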
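Why left padding? A decoder-only model like Llama 2 appends new tokens after the last position of each row. With right padding, the shorter prompts would be followed by pad tokens before the generated text. The pure-Python sketch below shows what left padding produces for a batch of two prompts. The token ids are made up, and `pad_id=0` is the id of Llama 2's `<unk>` token, used here as padding.

```python
from typing import List, Tuple


def left_pad(batch: List[List[int]], pad_id: int) -> Tuple[List[List[int]], List[List[int]]]:
    """Left-pad token id sequences to the same length and build the attention masks."""
    max_len = max(len(ids) for ids in batch)
    input_ids, attention_mask = [], []
    for ids in batch:
        n_pad = max_len - len(ids)
        input_ids.append([pad_id] * n_pad + ids)
        attention_mask.append([0] * n_pad + [1] * len(ids))  # 0 = position is ignored
    return input_ids, attention_mask


ids, mask = left_pad([[1, 306, 763], [1, 15043]], pad_id=0)
print(ids)   # [[1, 306, 763], [0, 1, 15043]]
print(mask)  # [[1, 1, 1], [0, 1, 1]]
```

Every row now ends with a real token, so generation continues each prompt directly.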

License limitations

The Llama 2 license has one major limitation that you should be aware of:

v. You will not use the Llama Materials or any output or results of the Llama Materials to improve any other large language model (excluding Llama 2 or derivative works thereof).

Open Datasets

Here is a list of instruction datasets that you can use for fine-tuning Llama 2:

OpenAssistant Conversations Dataset (OASST1) (84.4k training examples)

OpenOrca (4.2M training examples)

openassistant-guanaco (9.8k training examples)

databricks-dolly-15k (15k training examples)

All these datasets are distributed with a license allowing commercial use.
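The SFTTrainer configuration in this article reads a single "text" column and an eval split named "test". databricks-dolly-15k has neither: its records have instruction, context, and response fields, and it only has a train split. The sketch below prepares it. The prompt template and the 1% test split are hypothetical choices of mine, and `format_dolly` and `load_dolly_splits` are illustrative helpers.

```python
def format_dolly(example: dict) -> dict:
    """Turn a databricks-dolly-15k record into a single "text" field.

    The "### ..." prompt template is a hypothetical choice.
    """
    prompt = f"### Instruction:\n{example['instruction']}\n\n"
    if example.get("context"):
        prompt += f"### Context:\n{example['context']}\n\n"
    return {"text": prompt + f"### Response:\n{example['response']}"}


def load_dolly_splits(test_size: float = 0.01, seed: int = 42):
    """Load dolly (train split only), carve out a test split, and add the "text" column."""
    from datasets import load_dataset  # lazy import: requires the datasets library

    dataset = load_dataset("databricks/databricks-dolly-15k", split="train")
    dataset = dataset.train_test_split(test_size=test_size, seed=seed)
    return dataset.map(format_dolly)  # applied to both the "train" and "test" splits
```

The returned object can be passed as `dataset` to the SFTTrainer code above.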

Why not target the attention k, v and q? In LoRA