Simple, Fast, and Memory-Efficient Inference for Mistral 7B with Activation-Aware Quantization (AWQ)

Using AWQ models with Hugging Face Transformers

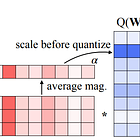

Quantization can significantly reduce the size of large language models (LLMs). Among the popular methods for quantization, activation-aware quantization (AWQ) has several advantages:

The quantization to 4-bit and lower precisions is very accurate

The models quantized with AWQ are faster for inference than models quantized with other methods

I explained in the following article how AWQ works and how to use it to quantize Llama 2 (using AutoAWQ):

AWQ is now even easier to use since Hugging Face Transformers supports it. We can directly load and use AWQ models from the Hugging Face Hub.

In this article, we will see how to use AWQ models for inference with Hugging Face Transformers and benchmark their inference speed compared to unquantized models.

For the experiments presented in this article, I use my own 4-bit version of Mistral 7B made with AutoAWQ. It is available on the Hugging Face Hub as kaitchup/Mistral-7B-awq-4bit.

I also implemented a notebook showing how to use AWQ in Hugging Face Transformers (in the appendix of the notebook, there is also the code to quantize Mistral 7B with AutoAWQ):

Hardware Requirements

For inference with Mistral 7B quantized to 4-bit, we need at least 7 GB of GPU memory. This is enough to load the model and run inference without batch decoding.
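To see why batch decoding needs more memory, here is a rough sketch of how the key/value cache grows with batch size. It assumes 32 decoder layers and key/value projections of width 1024 (both visible in the model printout later in this article) and a cache stored in 16-bit. The helper `kv_cache_bytes` is only illustrative, and the estimate ignores activations, the CUDA context, and any temporary buffers.

```python
def kv_cache_bytes(num_tokens, batch_size=1, num_layers=32, kv_dim=1024, bytes_per_value=2):
    """Bytes needed to cache keys and values for `num_tokens` tokens per sequence."""
    # Each layer stores 2 tensors (keys and values) with kv_dim values per token.
    return 2 * num_layers * kv_dim * bytes_per_value * num_tokens * batch_size


# 1,000 tokens per sequence, for a few batch sizes
for batch_size in (1, 8, 32):
    gb = kv_cache_bytes(1000, batch_size) / 1e9
    print(f"batch size {batch_size}: {gb:.2f} GB")
```

Under these assumptions, a single sequence of 1,000 tokens costs about 0.13 GB of cache, while a batch of 32 such sequences costs about 4.2 GB, which is as much as the quantized weights themselves.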

For examples of hardware configurations, have a look at this page:

If you use Google Colab, any of the GPUs available would work.

Load Mistral 7B AWQ

AWQ models are supported by Hugging Face Transformers but only for inference. We can’t quantize a model using the AWQ method directly with Transformers. I use AutoAWQ for quantization because it’s very easy to use and the quantization pipeline is similar to the one implemented in AutoGPTQ, which I also use.

However, AutoAWQ has limited features. For instance, it only supports quantization in 4-bit. For quantization in lower precision, we can use llm-awq which is the implementation proposed by the authors of AWQ.

Transformers officially supports models quantized by AutoAWQ and llm-awq. You can find several AWQ models on the Hugging Face Hub, but make sure that the file “config.json” has a field “quantization_config”. Transformers needs this information to properly load the model.
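This check can be done on the config.json alone, before downloading the weights. The sketch below uses only the standard library. The helper name `awq_settings` and the inline JSON string are illustrative and not part of Transformers or AutoAWQ.

```python
import json


def awq_settings(config_text):
    """Return the AWQ quantization settings found in a config.json string, or None."""
    config = json.loads(config_text)
    quant = config.get("quantization_config")
    # The field must exist and declare the AWQ method.
    if not isinstance(quant, dict) or quant.get("quant_method") != "awq":
        return None
    return quant


# Illustrative config.json content (shortened to the fields that matter here)
example = """
{
  "model_type": "mistral",
  "quantization_config": {
    "bits": 4,
    "group_size": 128,
    "quant_method": "awq",
    "version": "gemm",
    "zero_point": true
  }
}
"""

settings = awq_settings(example)
if settings is None:
    print("No AWQ quantization_config found")
else:
    print("AWQ model:", settings["bits"], "bits, group size", settings["group_size"])
```

In practice, the text would come from the config.json of the model repository, for example after fetching that single file with hf_hub_download from the huggingface_hub package.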

The quantization_config should look like this (extract from the config.json of the AWQ version of Mistral 7B):

    "quantization_config": {
        "bits": 4,
        "group_size": 128,
        "quant_method": "awq",
        "version": "gemm",
        "zero_point": true
    },

Before loading the model, we must install AutoAWQ. If you have CUDA 12, you only have to run:

    pip install autoawq

But if you have CUDA 11, install this version instead (only this version works in Google Colab):

    pip install https://github.com/casper-hansen/AutoAWQ/releases/download/v0.1.6/autoawq-0.1.6+cu118-cp310-cp310-linux_x86_64.whl

Then, you can load the model as you would with any other model:

    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained('kaitchup/Mistral-7B-awq-4bit', use_fast=True)
    model = AutoModelForCausalLM.from_pretrained('kaitchup/Mistral-7B-awq-4bit', device_map="cuda:0")

When it reads the config.json, Transformers automatically detects that it is an AWQ model.

We can make sure that the model is quantized by printing it:

    print(model)

It prints:

    MistralForCausalLM(
      (model): MistralModel(
        (embed_tokens): Embedding(32000, 4096)
        (layers): ModuleList(
          (0-31): 32 x MistralDecoderLayer(
            (self_attn): MistralAttention(
              (q_proj): WQLinear_GEMM(in_features=4096, out_features=4096, bias=False, w_bit=4, group_size=128)
              (k_proj): WQLinear_GEMM(in_features=4096, out_features=1024, bias=False, w_bit=4, group_size=128)
              (v_proj): WQLinear_GEMM(in_features=4096, out_features=1024, bias=False, w_bit=4, group_size=128)
              (o_proj): WQLinear_GEMM(in_features=4096, out_features=4096, bias=False, w_bit=4, group_size=128)
              (rotary_emb): MistralRotaryEmbedding()
            )
            (mlp): MistralMLP(
              (gate_proj): WQLinear_GEMM(in_features=4096, out_features=14336, bias=False, w_bit=4, group_size=128)
              (up_proj): WQLinear_GEMM(in_features=4096, out_features=14336, bias=False, w_bit=4, group_size=128)
              (down_proj): WQLinear_GEMM(in_features=14336, out_features=4096, bias=False, w_bit=4, group_size=128)
              (act_fn): SiLUActivation()
            )
            (input_layernorm): MistralRMSNorm()
            (post_attention_layernorm): MistralRMSNorm()
          )
        )
        (norm): MistralRMSNorm()
      )
      (lm_head): Linear(in_features=4096, out_features=32000, bias=False)
    )

We can see in the output that all the linear layers of the attention and MLP blocks are quantized modules of type “WQLinear_GEMM”, while the embeddings, the normalization layers, and lm_head are left unquantized.
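The shapes in this printout are enough to estimate the size of the quantized weights. The sketch below is a back-of-the-envelope calculation, not a description of AutoAWQ's file format: it assumes that each group of 128 weights stores one 16-bit scale and one 4-bit zero point, and that embed_tokens and lm_head are kept in 16-bit. The small normalization layers are ignored.

```python
GROUP_SIZE = 128
WEIGHT_BITS = 4
SCALE_BITS = 16  # assumption: one 16-bit scale per group
ZERO_BITS = 4    # assumption: one 4-bit zero point per group

NUM_LAYERS = 32
VOCAB_SIZE, HIDDEN_SIZE = 32000, 4096

# (in_features, out_features) of the quantized linear layers in one decoder layer
layer_linears = {
    "q_proj": (4096, 4096),
    "k_proj": (4096, 1024),
    "v_proj": (4096, 1024),
    "o_proj": (4096, 4096),
    "gate_proj": (4096, 14336),
    "up_proj": (4096, 14336),
    "down_proj": (14336, 4096),
}

quantized_params = NUM_LAYERS * sum(i * o for i, o in layer_linears.values())
bits_per_weight = WEIGHT_BITS + (SCALE_BITS + ZERO_BITS) / GROUP_SIZE
quantized_bytes = quantized_params * bits_per_weight / 8

# embed_tokens and lm_head, 2 bytes per parameter
unquantized_bytes = 2 * VOCAB_SIZE * HIDDEN_SIZE * 2

total_gb = (quantized_bytes + unquantized_bytes) / 1e9
print(f"{quantized_params / 1e9:.2f}B parameters in quantized layers")
print(f"about {total_gb:.2f} GB of weights")
```

Under these assumptions, the estimate lands at roughly 4.15 GB, close to the 4.2 GB that the model occupies on disk.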

Inference with Mistral 7B AWQ

For inference, we can use AWQ models like any other model. For instance, with TextStreamer, it is as simple as this:

    from transformers import TextStreamer

    inputs = tokenizer(["Gravity is "], return_tensors="pt").to('cuda')
    streamer = TextStreamer(tokenizer)

    # Despite returning the usual output, the streamer will also print the generated text to stdout.
    output = model.generate(**inputs, streamer=streamer, max_new_tokens=200)
    print(output)

It should yield the following output:

<s> Gravity is 100% a movie that you should see in theaters. It’s a movie that you should see in IMAX. It’s a movie that you should see in 3D. It’s a movie that you should see in 3D IMAX. I’m not going to spoil anything, but I will say that the movie is a visual spectacle. It’s a movie that you should see in 3D IMAX. I’m not going to spoil anything, but I will say that the movie is a visual spectacle. It’s a movie that you should see in 3D IMAX. I’m not going to spoil anything, but I will say that the movie is a visual spectacle. It’s a movie that you should see in 3D IMAX.

The beginning of the generated text looks relevant and fluent, but then the model repeats itself over and over until “max_new_tokens” is reached. This behavior is expected: Mistral 7B, like Llama 2, is a base model that has not been fine-tuned to end its answers with an EOS token, and greedy decoding makes such loops likely. In other words, Mistral 7B doesn’t know when to stop generating. We would have to fine-tune the model on examples ending with an EOS token to teach it when to stop.
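Short of fine-tuning, a crude workaround is to post-process the decoded text and cut it where the loop starts. The sketch below is only an illustration of that idea: the helper `trim_repetition` is hypothetical, and it simply stops at the first sentence that has already been seen.

```python
import re


def trim_repetition(text):
    """Keep sentences up to (not including) the first one that already appeared."""
    # Split after sentence-ending punctuation followed by whitespace.
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    seen = set()
    kept = []
    for sentence in sentences:
        if sentence in seen:
            break
        seen.add(sentence)
        kept.append(sentence)
    return " ".join(kept)


sample = (
    "It is a visual spectacle. You should see it in 3D IMAX. "
    "It is a visual spectacle. You should see it in 3D IMAX."
)
print(trim_repetition(sample))
```

The generate() method of Transformers also accepts decoding options such as repetition_penalty and no_repeat_ngram_size, which act during generation rather than afterwards. I did not test them for this article.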

In another article, I’ll show you how to properly benchmark inference speed with optimum-benchmark, but for now let’s just count how many tokens per second, on average, Mistral 7B AWQ can generate and compare it to the unquantized version of Mistral 7B. Note: I used the A100 GPU of Google Colab to be able to load the unquantized version of Mistral 7B with bfloat16.

I measured it as follows for the AWQ version:

    import time

    prompts = ["Gravity is a force ", "Gravity is ", "The recipe is", "Mistral is ", "The best movie is "]

    duration = 0.0
    total_length = 0

    for p in prompts:
        inputs = tokenizer([p], return_tensors="pt").to('cuda')

        start_time = time.time()
        output = model.generate(**inputs, max_new_tokens=1000)
        elapsed = time.time() - start_time
        duration += elapsed

        # Note: len(output[0]) includes the prompt tokens in addition to the generated ones.
        tok_sec_prompt = round(len(output[0]) / elapsed, 3)
        print("Prompt --- %s tokens/seconds ---" % (tok_sec_prompt))
        total_length += len(output[0])

    tok_sec = round(total_length / duration, 3)
    print("Average --- %s tokens/seconds ---" % (tok_sec))

It should print the following average (or a close number):

    Average --- 22.399 tokens/seconds ---

The memory consumption peaked at 6.2 GB.

With the unquantized version, the memory consumption peaked at 15 GB. The average speed is 25.46 tokens/second, i.e., about 14% faster than the AWQ version (equivalently, the AWQ version is about 12% slower).
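The direction of the comparison matters when turning these two throughputs into a percentage. A minimal worked example, using the numbers measured above (the helper `relative_change` is just for illustration):

```python
def relative_change(value, reference):
    """Relative difference of `value` with respect to `reference`, in percent."""
    return (value - reference) / reference * 100.0


awq_speed = 22.399         # tokens/second, AWQ model
unquantized_speed = 25.46  # tokens/second, bfloat16 model

# Unquantized model measured against the AWQ model
print(round(relative_change(unquantized_speed, awq_speed), 1))  # 13.7
# AWQ model measured against the unquantized model
print(round(relative_change(awq_speed, unquantized_speed), 1))  # -12.0

# Peak memory: 15 GB unquantized vs. 6.2 GB with AWQ
print(round(15 / 6.2, 1))  # 2.4
```

In other words, the AWQ model is about 12% slower than the unquantized model while using about 2.4 times less GPU memory.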

Conclusion

Using AWQ models for inference has never been easier. Mistral 7B quantized with AWQ weighs only 4.2 GB on the hard drive and only consumes 6.2 GB of VRAM for inference (without batch decoding).

Despite the quantization, the model is only 12% slower than the original model with bfloat16 parameters.

AWQ models are memory-efficient and faster than GPTQ models, but at the time of writing they still lack fine-tuning support by the main deep learning frameworks. For instance, while it is possible to add LoRA adapters to fine-tune GPTQ models, e.g., using the PEFT library, this is not yet possible for AWQ models.