Hi Everyone,

In this edition of The Weekly Kaitchup:

A New Zephyr 7B Based on Gemma

PEFT: LoRA with AWQ and AQLM

Genstruct 7B: Generate an Instruction Dataset from Raw Text

The Kaitchup now has 2,391 subscribers. Thanks a lot for your support!

If you are a free subscriber, consider upgrading to paid to access all the notebooks and articles. There is a 7-day trial that you can cancel anytime.

A New Zephyr 7B Based on Gemma

Hugging Face has fine-tuned and aligned Google’s Gemma 7B, following a recipe similar to the one they used to train their original Zephyr.

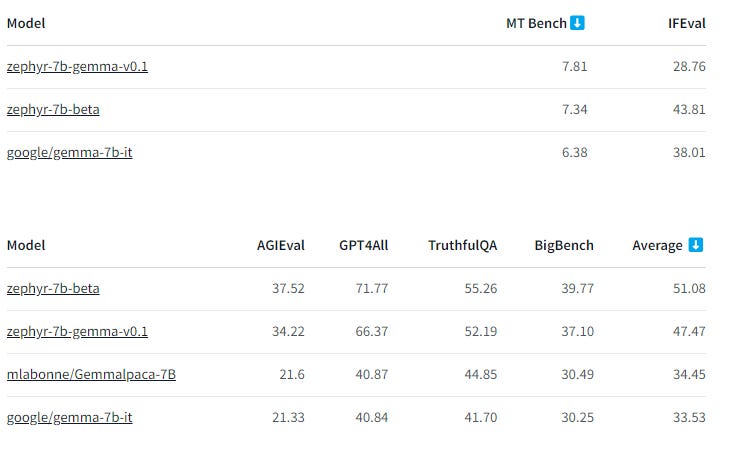

According to the standard public benchmarks, this new Zephyr is not as good as the original one based on Mistral 7B. However, it performs better on the MT Bench.

Nonetheless, this release is informative as many have reported difficulties in fine-tuning Gemma 7B. This recipe published by Hugging Face seems to work relatively well and can be a good starting point.

This recipe is available here:

The model is on the Hugging Face Hub:

Note also that several bugs have been identified in the Gemma models released on the Hugging Face Hub. These bugs are currently being fixed. I assume the Gemma models will be easier to fine-tune (with more stable learning curves) in the coming weeks. Meanwhile, if you want to fine-tune Gemma, I recommend using unsloth, which has already implemented many corrections.

PEFT: LoRA with AWQ and AQLM

The latest update of PEFT (0.9.0) supports fine-tuning LoRA adapters on top of AWQ and AQLM quantized models. Note: GPTQ models were already supported.

To make it work, in principle, you only have to load the model without passing any quantization_config. The quantization configuration will be automatically retrieved from the config.json in the model’s directory.
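To illustrate, here is a minimal sketch of what this could look like. The model ID, the LoRA hyperparameters, and the target modules are my own assumptions for the example, not values from the PEFT release notes, and loading an AWQ checkpoint also requires the corresponding quantization backend to be installed.

```python
# Sketch only: the model ID and LoRA hyperparameters are illustrative assumptions.
MODEL_ID = "TheBloke/Mistral-7B-v0.1-AWQ"  # assumed example of an AWQ checkpoint


def lora_kwargs(r: int = 16, alpha: int = 32, dropout: float = 0.05) -> dict:
    """Keyword arguments for peft.LoraConfig (values are arbitrary examples)."""
    return {
        "r": r,
        "lora_alpha": alpha,
        "lora_dropout": dropout,
        # Assumed attention projection names for a Mistral-style model.
        "target_modules": ["q_proj", "k_proj", "v_proj", "o_proj"],
        "task_type": "CAUSAL_LM",
    }


def load_quantized_with_lora(model_id: str = MODEL_ID):
    """Load a pre-quantized model and attach a trainable LoRA adapter."""
    # Imported lazily so lora_kwargs() can be inspected without these libraries.
    from peft import LoraConfig, get_peft_model
    from transformers import AutoModelForCausalLM

    # No quantization_config is passed: it is read from the checkpoint's config.json.
    model = AutoModelForCausalLM.from_pretrained(model_id, device_map="auto")
    return get_peft_model(model, LoraConfig(**lora_kwargs()))
```

Calling `load_quantized_with_lora()` should return a PEFT model whose only trainable parameters are the LoRA weights, which you can then pass to your usual trainer.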

However, be aware that if you fine-tune LoRA on top of a model quantized with these methods, you can’t merge the adapter into the model once it is fine-tuned.

Next week, I’ll probably write a tutorial about fine-tuning AQLM models and report on how well it performs. If it works, it could be a very cheap way to fine-tune Mixtral-8x7B on consumer hardware.

Genstruct 7B: Generate an Instruction Dataset from Raw Text

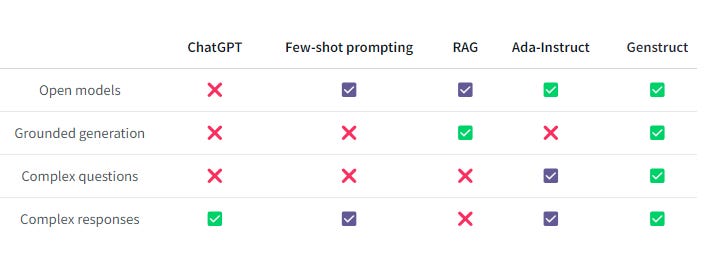

Fine-tuning an instruct/chat LLM on a particular domain with specific knowledge can be a very difficult task as we usually lack the data for this fine-tuning.

There are many methods to generate synthetic instruction datasets, but none of them is both easy to set up and able to generate long, complex instructions.

With Genstruct 7B, it seems much simpler. It is an LLM, based on Mistral 7B, fine-tuned to generate instruction datasets from raw text. To make it, Nous Research followed an approach similar to Ada-Instruct:

Ada-Instruct: Adapting Instruction Generators for Complex Reasoning

Genstruct 7B is available on the HF Hub:

HF Hub: NousResearch/Genstruct-7B
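Here is a rough sketch of how generating one instruction from a raw passage could look. I assume here that the model's chat template takes a single message with `title` and `content` keys, as I recall from the model card; the helper names and the generation settings are hypothetical, so check the model card before relying on this.

```python
MODEL_NAME = "NousResearch/Genstruct-7B"


def build_messages(title: str, passage: str) -> list:
    """Wrap a raw passage in the message format assumed by the chat template."""
    # Assumption: the template expects 'title' and 'content' keys.
    return [{"title": title.strip(), "content": passage.strip()}]


def generate_instruction(title: str, passage: str, max_new_tokens: int = 512) -> str:
    """Generate one instruction/response pair grounded in the passage."""
    # Imported lazily: running this downloads a 7B model and needs a GPU.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_NAME)
    model = AutoModelForCausalLM.from_pretrained(MODEL_NAME, device_map="auto")

    inputs = tokenizer.apply_chat_template(
        build_messages(title, passage), return_tensors="pt"
    ).to(model.device)
    output = model.generate(inputs, max_new_tokens=max_new_tokens)
    # Keep only the text generated before the first end-of-sequence token.
    return tokenizer.decode(output[0]).split(tokenizer.eos_token)[0]
```

To build a dataset, you would split your raw text into passages and call `generate_instruction` once per passage.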

The Salt

In The Salt this week, I published a long review of LongRoPE. LongRoPE is a new extension of RoPE that is much more robust when modeling extremely long contexts. I explain how LongRoPE works for extending the context size of LLMs to 2 million tokens.

LongRoPE: Towards Unlimited Context Length for the Transformer

Evergreen Kaitchup

In this section of The Weekly Kaitchup, I mention which AI notebooks I have checked and updated, with a brief description of what I have done.

This week I have updated the notebook implementing the fine-tuning of Mistral 7B on consumer hardware, using TRL and QLoRA.

#22 Fine-tune Mistral 7B on Your Computer with QLoRa and TRL

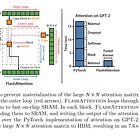

To sum up, I checked that the notebook still runs properly, added support for FlashAttention-2, used a longer maximum sequence length, and switched from float16 to bfloat16, among other less significant changes. All these changes significantly accelerate and improve the fine-tuning.
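For reference, a minimal sketch of the kind of model loading these changes imply. This is not the notebook's exact code: the function names, the fallback to float16 and SDPA on older GPUs, and the quantization settings are my assumptions for the example. FlashAttention-2 and native bfloat16 both require an Ampere or more recent GPU (compute capability 8.0 or higher).

```python
def precision_and_attention(compute_capability_major: int) -> dict:
    """Pick dtype and attention implementation from the GPU generation."""
    ampere_or_newer = compute_capability_major >= 8
    return {
        "dtype": "bfloat16" if ampere_or_newer else "float16",
        "attn_implementation": "flash_attention_2" if ampere_or_newer else "sdpa",
    }


def load_base_model(model_id: str = "mistralai/Mistral-7B-v0.1"):
    """Load a 4-bit base model for QLoRA fine-tuning (requires a CUDA GPU)."""
    # Imported lazily so the helper above works without torch installed.
    import torch
    from transformers import AutoModelForCausalLM, BitsAndBytesConfig

    major, _ = torch.cuda.get_device_capability()
    choice = precision_and_attention(major)
    dtype = getattr(torch, choice["dtype"])

    # Common QLoRA settings; assumed here, not copied from the notebook.
    bnb_config = BitsAndBytesConfig(
        load_in_4bit=True,
        bnb_4bit_quant_type="nf4",
        bnb_4bit_compute_dtype=dtype,
        bnb_4bit_use_double_quant=True,
    )
    return AutoModelForCausalLM.from_pretrained(
        model_id,
        quantization_config=bnb_config,
        torch_dtype=dtype,
        attn_implementation=choice["attn_implementation"],
        device_map={"": 0},
    )
```

The FlashAttention-2 path also needs the `flash-attn` package installed.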

The article has also been updated to reflect these changes:

That’s all for this week.

If you like reading The Kaitchup, consider sharing it with friends and coworkers:

Have a nice weekend!
