ChatGPT is a chatbot developed by OpenAI. It is based on InstructGPT: it has been trained to follow and answer instructions, or so-called “prompts,” written by users.

ChatGPT demonstrates impressive abilities in providing coherent and relevant detailed answers to user prompts. It seems to perform especially well at natural language processing (NLP) tasks such as summarization, question answering, language generation, and machine translation.

However, since it is a very recent system, ChatGPT has yet to be evaluated scientifically so that its NLP performance can be compared with previous work.

As a step in that direction, Tencent AI published a preliminary study on ChatGPT’s ability to translate:

Is ChatGPT A Good Translator? A Preliminary Study by Wenxiang Jiao, Wenxuan Wang, Jen-tse Huang, Xing Wang, and Zhaopeng Tu (Tencent AI)

The main objective of this study is to evaluate ChatGPT for translating text into English, since most of its training data is in English. Note: ChatGPT is based on InstructGPT, as mentioned in the blog post. InstructGPT is GPT-3 fine-tuned with prompts “mostly in English” (Ouyang et al., 2022). Moreover, 93% of GPT-3’s pre-training data is English (Brown et al., 2020).

They also evaluate translation into other languages that are much less represented in its training data, such as Japanese and Romanian, and thus more challenging.

In this article, I will analyze and explain their main findings, especially to highlight what seems to work and what doesn’t when using ChatGPT as a machine translation system.

Translation Prompt

When dealing with generative language models, one of the most important steps is prompt design.

We need to find an appropriate natural language formulation to query the model given our target task. Here we want ChatGPT to translate a sentence in a source language, denoted “[SRC],” into a target language, denoted “[TGT].”

To find good prompts, Tencent AI directly asked ChatGPT to suggest 10 of them, using the following request:

Provide ten concise prompts or templates that can make you translate.

As expected, ChatGPT returned 10 prompts, but with only a few differences between them. The authors finally decided to try only the following 3, which are the most representative of the 10 prompts initially returned by ChatGPT:

Prompt 1: Translate these sentences from [SRC] to [TGT]:

Prompt 2: Answer with no quotes. What do these sentences mean in [TGT]?

Prompt 3: Please provide the [TGT] translation for these sentences:
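To make the placeholders concrete, here is a minimal Python sketch of how such templates could be filled in. The three template strings come from the study; the helper `build_prompt`, the choice to append the sentences one per line, and the German example sentence are my own assumptions for illustration, not the authors' code.

```python
# The three templates reported in the study; [SRC] and [TGT] are placeholders.
TEMPLATES = {
    1: "Translate these sentences from [SRC] to [TGT]:",
    2: "Answer with no quotes. What do these sentences mean in [TGT]?",
    3: "Please provide the [TGT] translation for these sentences:",
}


def build_prompt(template_id, sentences, tgt, src=None):
    """Fill a template, then append the sentences to translate, one per line.

    Hypothetical helper: how the sentences are attached to the prompt
    is an assumption, not something described in this article.
    """
    template = TEMPLATES[template_id]
    if "[SRC]" in template:
        if src is None:
            raise ValueError("this template needs a source language")
        template = template.replace("[SRC]", src)
    template = template.replace("[TGT]", tgt)
    return template + "\n" + "\n".join(sentences)


# Prompt 3 never mentions the source language, so only the target is given.
print(build_prompt(3, ["Guten Morgen."], tgt="English"))
```

Only Prompt 1 requires a source language, which is why `src` is optional here.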

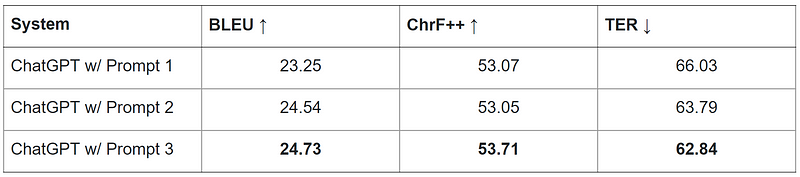

They evaluated each of these prompts on a Chinese-to-English translation task ([SRC]=Chinese, [TGT]=English), and obtained the following results:

BLEU, chrF++, and TER are 3 automatic metrics for evaluating machine translation quality. With BLEU and chrF++, higher scores are better. With TER, lower scores are better.
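To give an intuition for why a lower TER is better, here is a deliberately simplified Python sketch of an edit-rate metric. It counts the word insertions, deletions, and substitutions needed to turn a translation into the reference, divided by the reference length. This is an assumption-laden approximation: real TER also counts a shift of a whole word sequence as a single edit, which this sketch ignores, and the function names are mine.

```python
def edit_distance(hyp, ref):
    """Word-level Levenshtein distance between two lists of tokens."""
    prev = list(range(len(ref) + 1))
    for i, h in enumerate(hyp, start=1):
        curr = [i]
        for j, r in enumerate(ref, start=1):
            cost = 0 if h == r else 1
            curr.append(min(prev[j] + 1,          # delete h from the hypothesis
                            curr[j - 1] + 1,      # insert r into the hypothesis
                            prev[j - 1] + cost))  # match or substitute
        prev = curr
    return prev[-1]


def simple_ter(hypothesis, reference):
    """Edits per reference word (no shifts, unlike real TER)."""
    hyp, ref = hypothesis.split(), reference.split()
    if not ref:
        raise ValueError("the reference must not be empty")
    return edit_distance(hyp, ref) / len(ref)


# One missing word out of a six-word reference: 1 edit / 6 words.
print(simple_ter("the cat sat on mat", "the cat sat on the mat"))
```

A translation identical to the reference scores 0, and every additional edit pushes the score up.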

Based on the scores obtained with these 3 metrics, they found that Prompt 3 performs the best. Prompt 2 also seems better than Prompt 1, even though their chrF++ scores look similar.

This is interesting because Prompt 1 mentions the source language but the other two prompts don’t. Yet, Prompt 1 underperforms. ChatGPT doesn’t seem to need to know the language of the text we want to translate.

This is impressive but also counter-intuitive. We could have expected ChatGPT to be more accurate when the source language is specified in the prompt. For human translators, knowing the source language is critical.

Currently, there is no good explanation for why ChatGPT yields lower scores when the source language is indicated. We can assume that ChatGPT automatically infers the source language from the user input. But if this were the case, providing the source language should have no impact at all, rather than the negative impact observed in Tencent AI’s results.

General translation

Now that we have found a good prompt, we can evaluate ChatGPT against state-of-the-art machine translation systems.

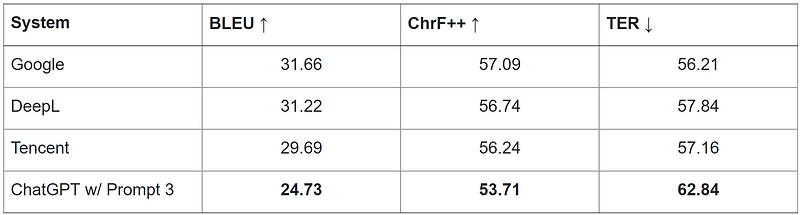

Tencent AI chose online systems for comparison: Google Translate, DeepL, and their own online system, Tencent TranSmart.

The results are as follows:

The three online systems perform similarly and seem to perform better than ChatGPT, even though the authors don’t report any statistical significance testing to confirm that the differences are really significant.
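For readers unfamiliar with significance testing in machine translation, one common approach is paired bootstrap resampling. The sketch below is a simplified illustration and not something the authors did: it assumes we have per-sentence scores (higher is better) for two systems on the same test set, and it averages them, whereas a corpus-level metric such as BLEU would normally be recomputed on each resampled set. The function name, parameters, and toy scores are all hypothetical.

```python
import random


def paired_bootstrap(scores_a, scores_b, n_resamples=1000, seed=0):
    """Fraction of resampled test sets on which system A beats system B.

    Both lists hold per-sentence scores for the same sentences, so each
    resample draws one set of indices and applies it to both systems.
    """
    if len(scores_a) != len(scores_b):
        raise ValueError("both systems must be scored on the same sentences")
    rng = random.Random(seed)
    n = len(scores_a)
    wins = 0
    for _ in range(n_resamples):
        idx = [rng.randrange(n) for _ in range(n)]  # sample with replacement
        mean_a = sum(scores_a[i] for i in idx) / n
        mean_b = sum(scores_b[i] for i in idx) / n
        if mean_a > mean_b:
            wins += 1
    return wins / n_resamples


# Toy, made-up scores for illustration only (not results from the study).
system_a = [0.5, 0.6, 0.7, 0.4]
system_b = [0.4, 0.5, 0.6, 0.3]
print(paired_bootstrap(system_a, system_b))
```

If system A wins on, say, at least 95% of the resampled sets, the difference is usually reported as significant at p < 0.05. When two systems are very close, this fraction drifts toward 50% and the observed difference cannot be trusted.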

Yet, I found these results impressive. Since ChatGPT is based on InstructGPT, we can assume that it was mainly trained on English data. It nonetheless seems able to capture the meaning of Chinese sentences well enough to generate English translations.

If we could fine-tune ChatGPT for Chinese-to-English, we would very likely obtain translations of much higher quality.

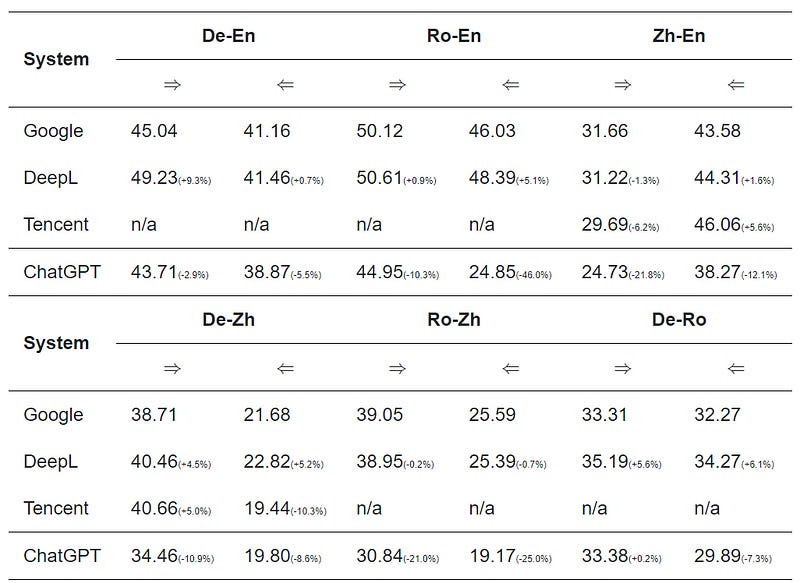

In the paper, Tencent AI also reports similar differences in all translation directions between English, Chinese, German, and Romanian.

Again, the performance (in BLEU) is impressive. Even for translation directions that don’t involve English, such as German-to-Chinese, ChatGPT can generate translations. According to BLEU, online systems remain better, as expected since they are trained for this task. ChatGPT isn’t!

Results involving Romanian are quite different. For instance, the BLEU score of ChatGPT is almost 50% lower than that of the online systems. This difference is probably statistically significant.

The authors propose an explanation. Romanian is a language for which far fewer resources, e.g., Romanian text on the Internet, are available than for German and Chinese. ChatGPT may have seen too few Romanian sentences during its training to model them accurately.

I would agree with this assumption, but it should be confirmed with more experiments involving other languages with similar amounts of resources, such as Croatian or Polish.

Domain and Robustness

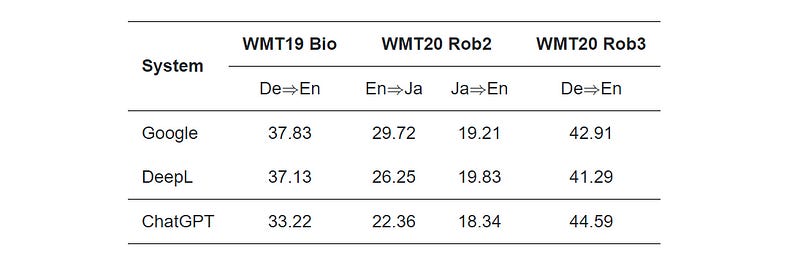

They carried out further experiments to evaluate the performance of ChatGPT in translating texts from a specific domain (biomedical) and user-generated texts (posted on social media, usually very noisy and full of grammatical errors).

Surprisingly, according to BLEU, the performance of ChatGPT remains close to that of online systems for translating biomedical texts from German to English.

ChatGPT does not seem to be negatively impacted by the very specific terms used in biomedical texts.

ChatGPT outperforms online systems in translating user-generated texts from German to English. This is impressive but less surprising. We can assume that ChatGPT has a lot of social media posts in its training data (crawled from the Web), while the training data of the online systems used for comparison are usually heavily curated, which makes these systems somewhat less robust to errors (grammatical, semantic, etc.).

As expected, this task is much more difficult for ChatGPT when translating into languages distant from English, such as Japanese, as shown by the results on WMT20 Rob2.

Limitations of this study

The authors acknowledge in their study that more experiments with more language pairs are necessary to better assess ChatGPT’s translation quality.

This assessment should be performed with human evaluation rather than with automatic metrics that are often inaccurate, especially when the scores of the systems compared are very close.

The lack of human evaluation is the main limitation of this work.

In my opinion, the impact of the prompt could also be further investigated. The authors chose a very original approach by letting ChatGPT itself suggest prompts. But prompting ChatGPT to suggest prompts is a chicken-and-egg problem. The prompt used to obtain prompts for machine translation may itself have a strong impact on all the subsequent experiments performed in this study. Previous work on prompt design for machine translation tried very diverse, handcrafted prompts.

Conclusion

ChatGPT is impressive at machine translation.

From this preliminary study, we can already conclude that ChatGPT would be good, and probably even better than standard online systems, at translating text for which the translation is expected to have the characteristics of ChatGPT’s training data, for instance, noisy user-generated texts in English.

Yet, as expected, ChatGPT is still behind more standard machine translation systems for translating into languages other than English, especially distant or low-resource languages, such as Japanese or Romanian.